The Interactive Programming Laboratory (IPLAB) is an interdisciplinary laboratory in the Department of Computer Science at the Graduate School of SIE, University of Tsukuba.

Recent Research Projects

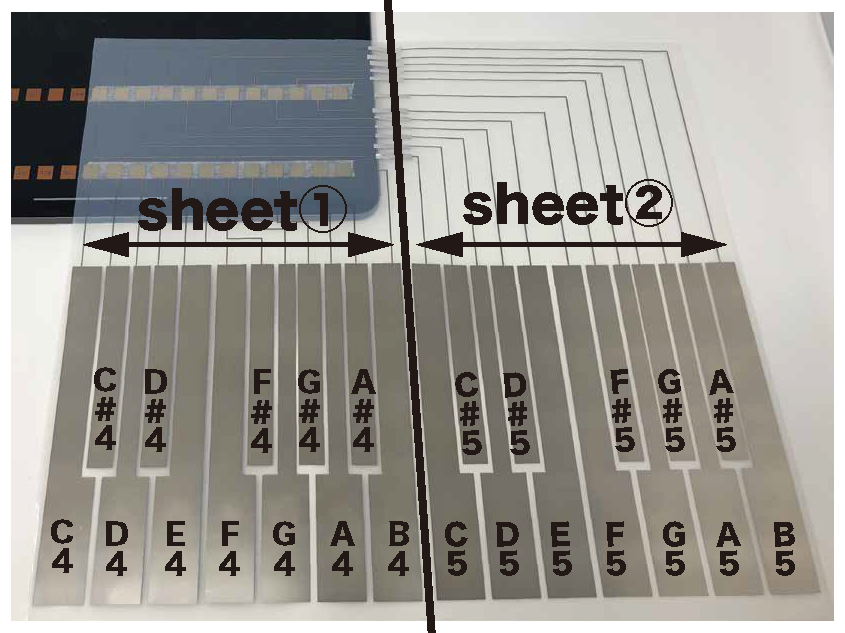

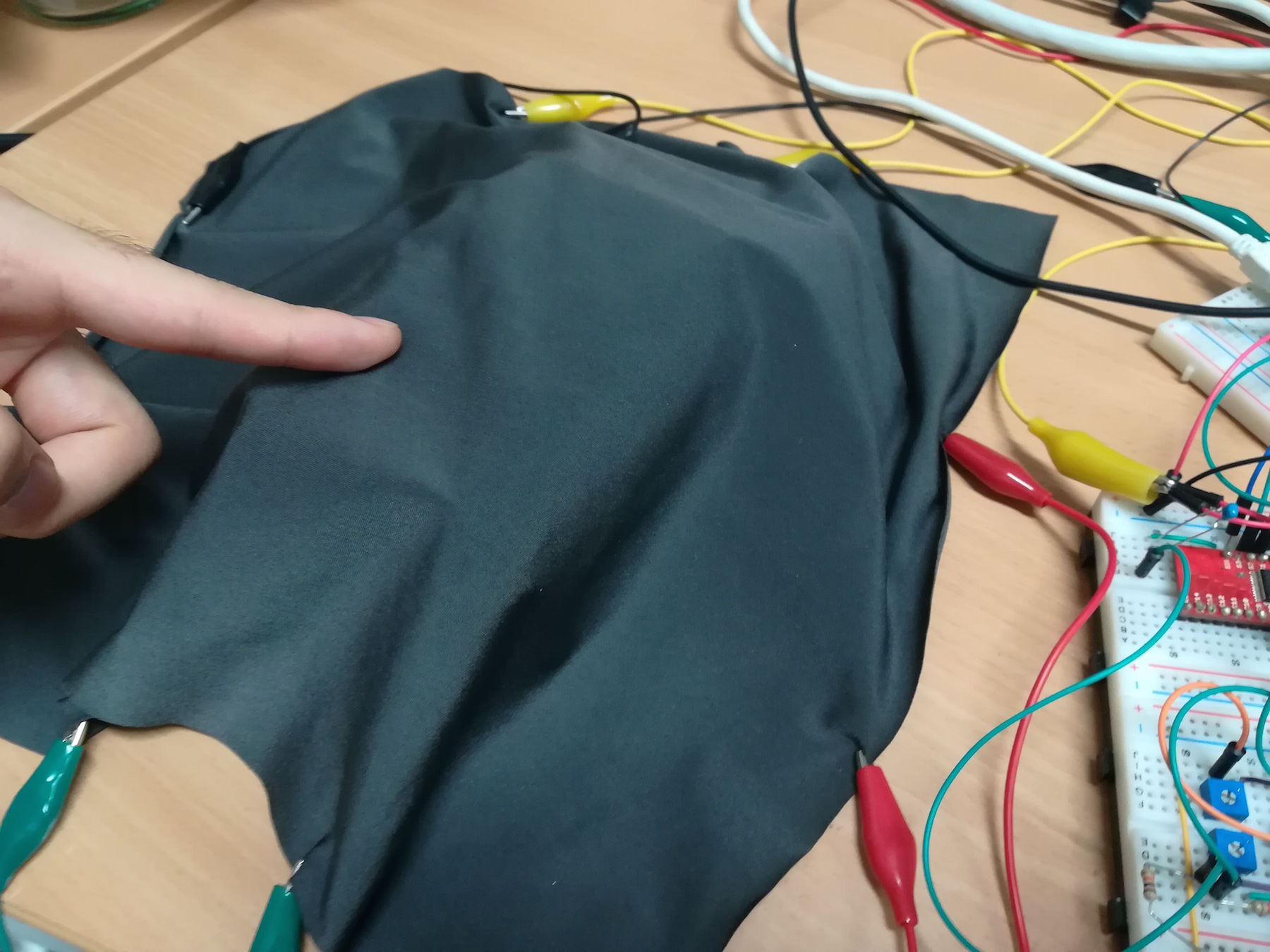

A Fabric Touch Interface that can Detect Touch Positions through Electrical Impedance

We propose a fabric touch interface that detects touch positions from the change in electrical impedance that occurs when the user touches a conductive fabric to which a current is applied. The user can use the fabric surface like a touch pad and perform various interactions. As a prototype, we developed hardware consisting of conductive fabric, electronic circuits on a breadboard, and an ARM mbed, together with recognition software based on machine learning.

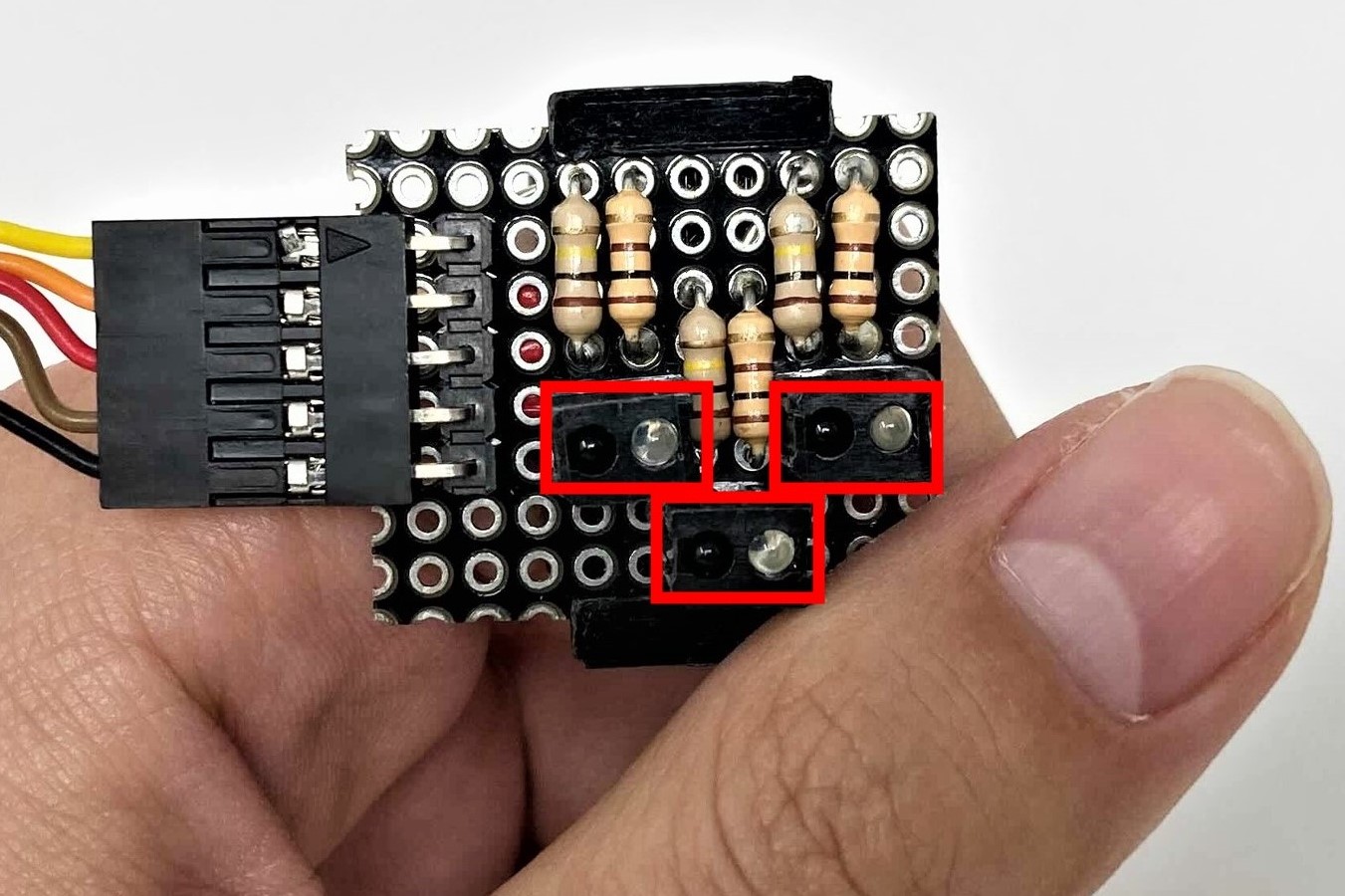

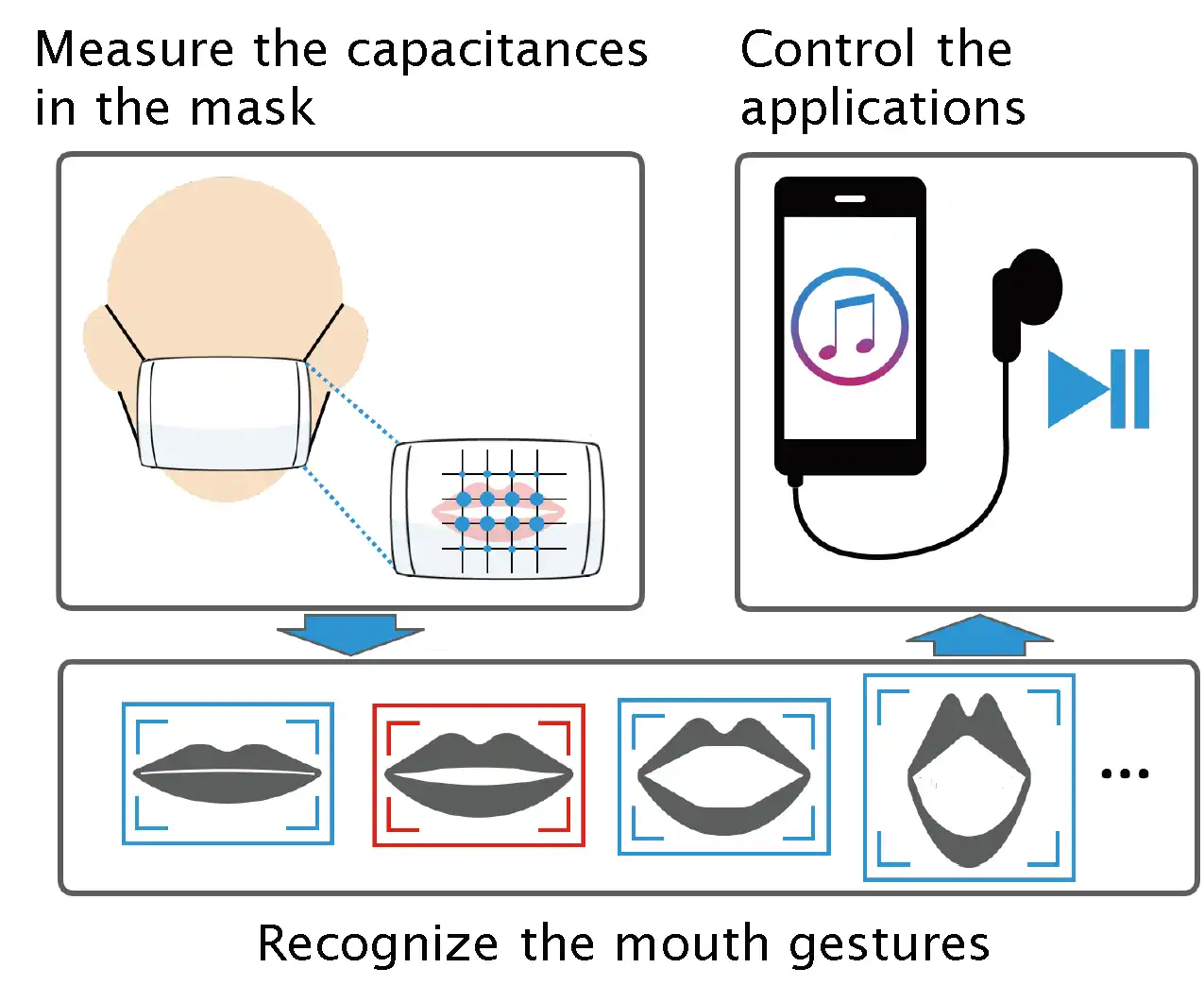

A Mouth Gesture Interface Featuring a Mutual-Capacitance Sensor Embedded in a Surgical Mask

We developed a mouth gesture interface featuring a mutual-capacitance sensor embedded in a surgical mask. This wearable, hands-free interface recognizes non-verbal mouth gestures. Because the gestures are non-verbal and the mouth is hidden by the mask, others can neither eavesdrop on nor see what the user is doing with the device. We confirm the feasibility of our approach and demonstrate the accuracy of mouth shape recognition. We present two applications: mouth shapes can be used to zoom in or out, or to select an application from a menu.

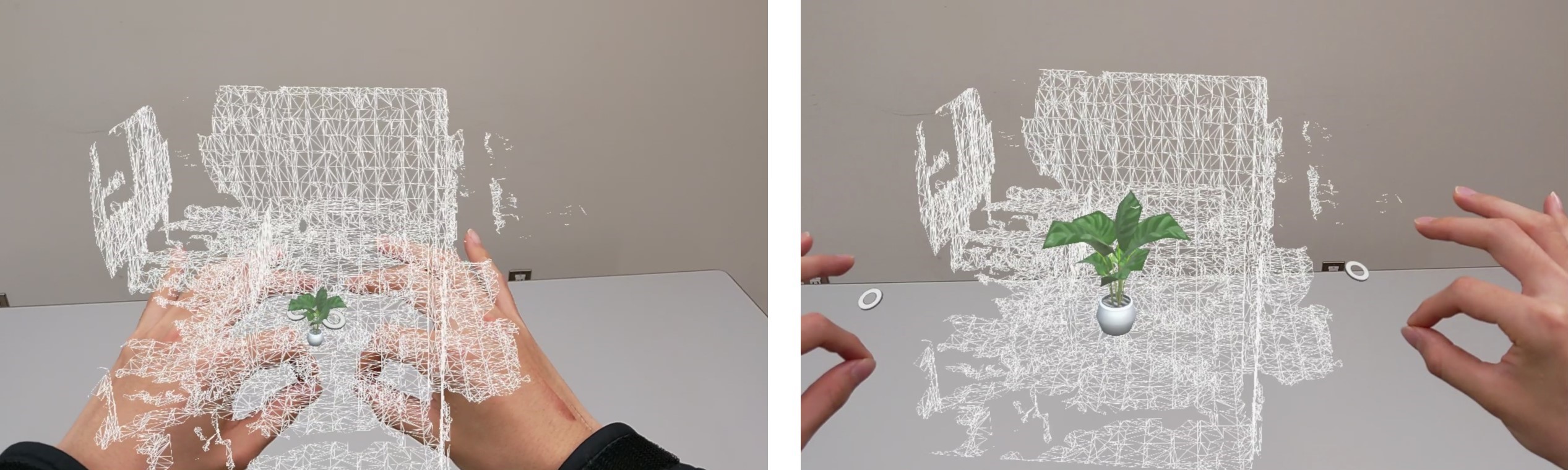

Hand Gesture Interaction with a Low-Resolution Infrared Image Sensor on an Inner Wrist

We propose a hand gesture interaction method using a low-resolution infrared image sensor on the inner wrist. We attach the sensor to the strap of a wrist-worn device, on the palmar side, and apply machine-learning techniques to recognize the gestures made by the opposite hand. Because the sensor is placed on the inner wrist, the user can naturally control its direction to reduce privacy invasion. Our method can recognize four types of hand gestures: static hand poses, dynamic hand gestures, finger motion, and the relative hand position. We developed a prototype using an 8 × 8 low-resolution infrared image sensor, which does not invade the privacy of surrounding people.

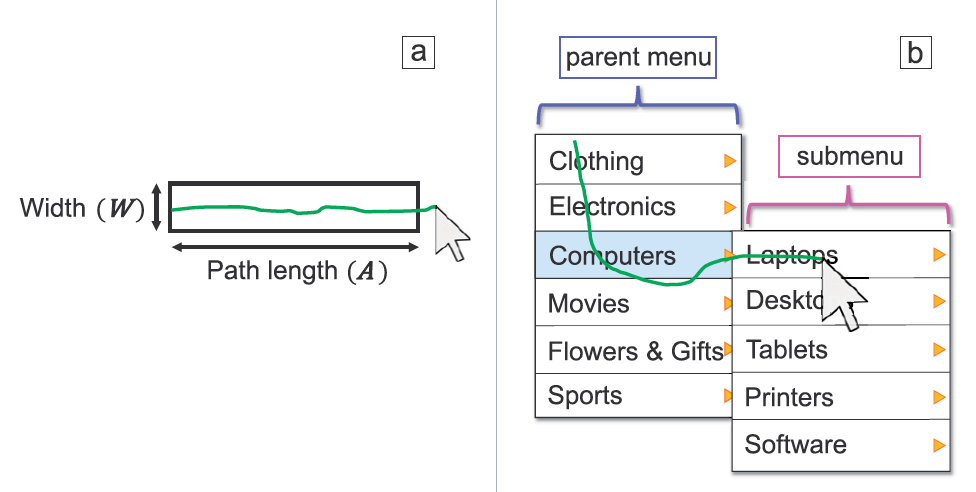

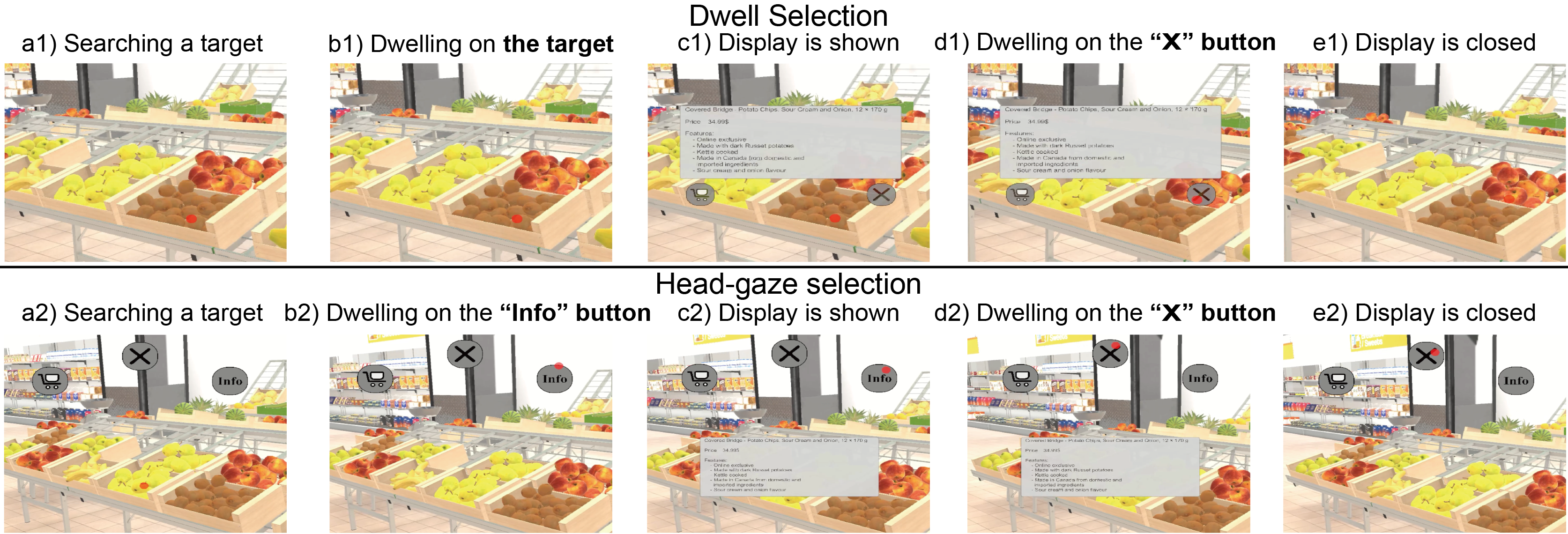

Dwell Time Reduction Technique using Fitts' Law for Gaze-Based Target Acquisition

In dwell-based techniques, a user acquires an object by dwelling (i.e., looking at the object for a sufficiently long time; this time is referred to as the dwell time). With a short dwell time, users can acquire a target quickly; however, unintentional manipulation tends to occur. To address this challenge, we apply Fitts' Law to a dwell-based technique. In a user study, our technique achieved an average dwell time of 86.7 ms with a 10.0% Midas-touch rate.
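For reference, Fitts' Law in its widely used Shannon formulation predicts the time needed to reach a target from the distance to the target and the target's width. The sketch below only evaluates that formula; the function names and the constants `a` and `b` are hypothetical placeholders, and how the technique turns such a prediction into a dwell time is not described here.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def predicted_movement_time(distance: float, width: float,
                            a: float, b: float) -> float:
    """Fitts' Law: MT = a + b * ID.

    a and b are empirically fitted constants; any values passed in
    here are illustrative assumptions, not results from the study.
    """
    return a + b * index_of_difficulty(distance, width)
```

For example, a target 100 units wide at a distance of 300 units has an index of difficulty of `log2(300 / 100 + 1)`, which is 2 bits.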

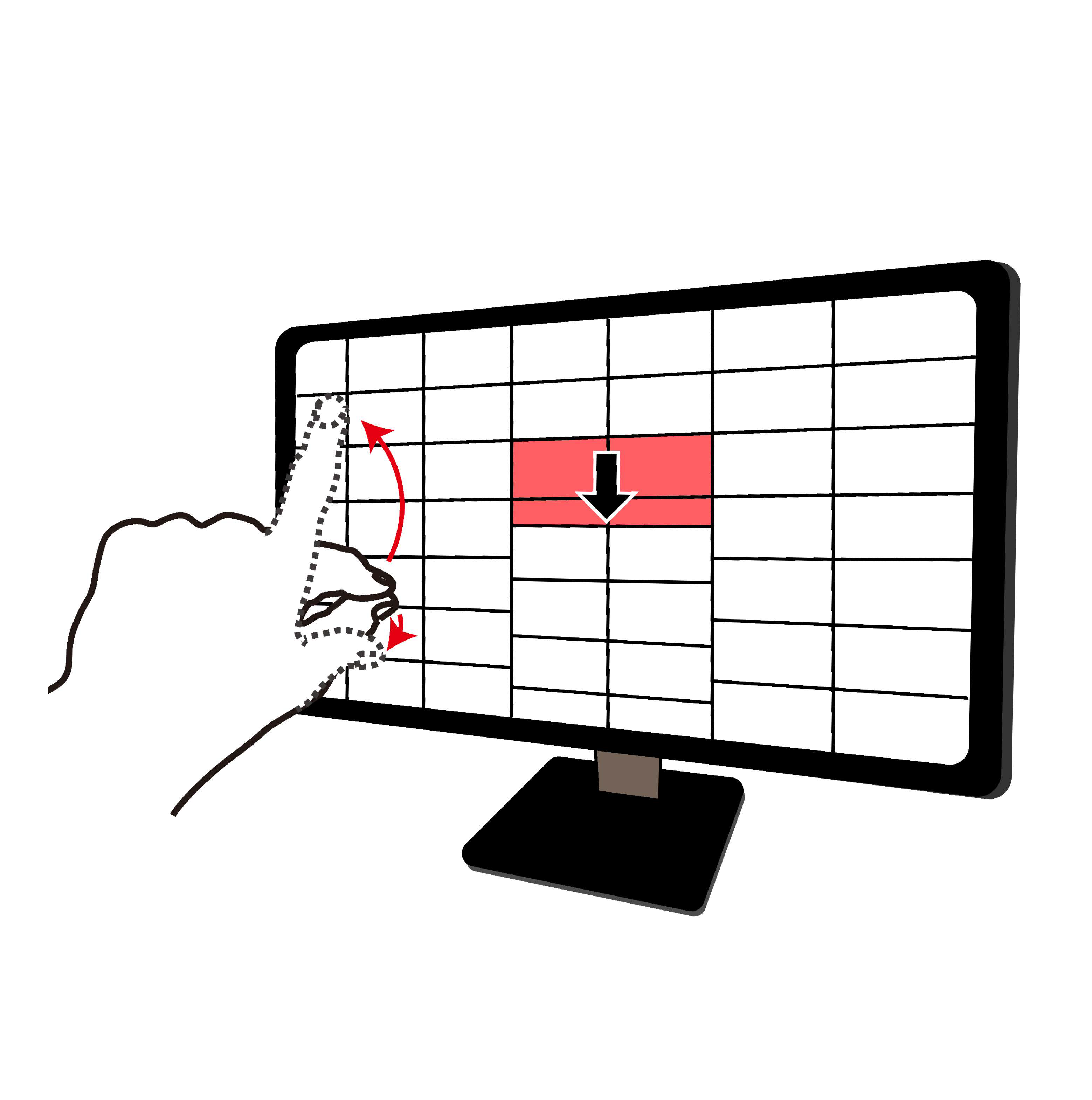

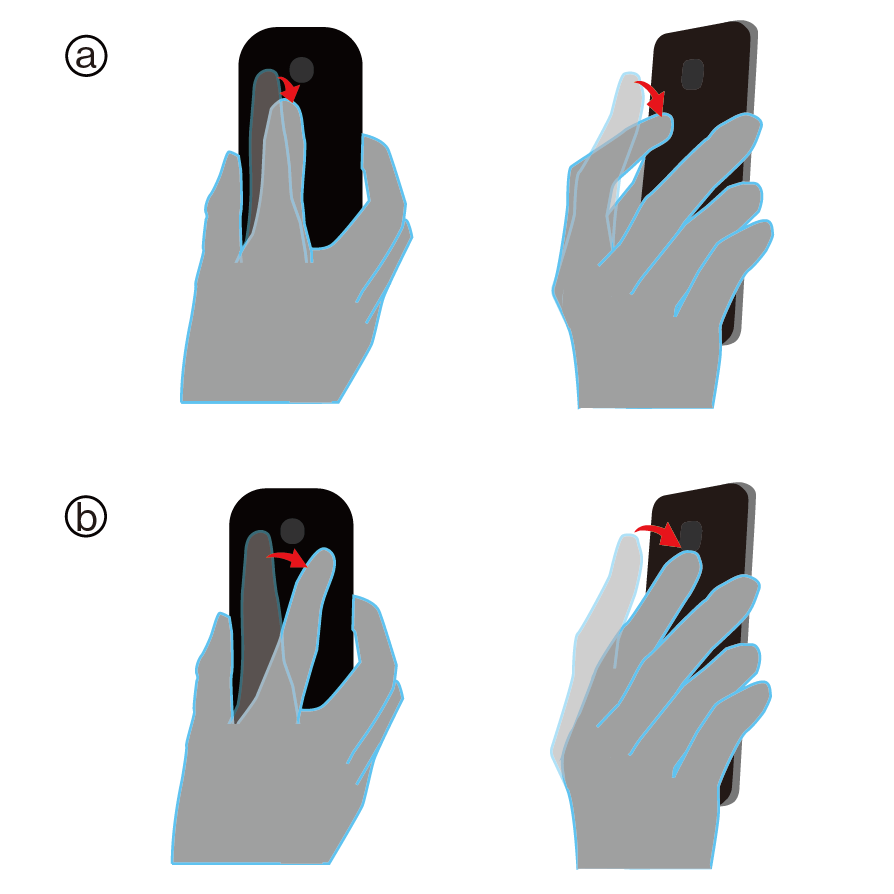

Pressure and Touch Area Based One-handed Interaction Technique Using Cursor for Large Smartphones

In this study, we propose a technique that enables the user to interact with a large smartphone with one hand without changing the grip. The user operates the unreachable area using a cursor, which appears and moves based on the user's touch on the touchscreen. The trigger is a large touch, a touch gesture that uses a large area of the finger, such as the pad of the thumb. A touch-down event occurs at the cursor position when the user increases the touch pressure, and a touch-up event occurs at the cursor position when the user decreases it. With this approach, the user can perform single-touch gestures (gestures made with a single finger, such as a tap, a drag, a double tap, or a swipe) through the cursor.
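The pressure-driven touch-down and touch-up behavior can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not the implementation used in the study: the class name, the normalized pressure range of 0 to 1, and the two threshold values are all hypothetical, and using a lower release threshold than press threshold (hysteresis) is a design choice added here to avoid flicker.

```python
class PressureCursor:
    """Emits touch events at the cursor position from pressure changes."""

    def __init__(self, press_threshold: float = 0.6,
                 release_threshold: float = 0.4):
        # Both thresholds are assumed values on a normalized 0..1 scale.
        self.press_threshold = press_threshold
        self.release_threshold = release_threshold
        self.is_down = False

    def update(self, pressure: float, cursor_pos: tuple):
        """Return ("touch_down" | "touch_up", cursor_pos), or None."""
        if not self.is_down and pressure >= self.press_threshold:
            # Pressure increased past the press threshold.
            self.is_down = True
            return ("touch_down", cursor_pos)
        if self.is_down and pressure <= self.release_threshold:
            # Pressure decreased below the release threshold.
            self.is_down = False
            return ("touch_up", cursor_pos)
        return None
```

Under these assumptions, a tap corresponds to a touch-down followed by a touch-up at the same cursor position, and a drag corresponds to the cursor moving between the two events.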

A Design of Eyes-Free Kana Entry Method Utilizing Single Stroke for Mobile Devices

Our goal is to realize eyes-free text entry even in public places, using the mobile devices that people habitually carry. We designed an eyes-free Japanese Kana entry method for mobile devices in which users enter each Kana with a single stroke.

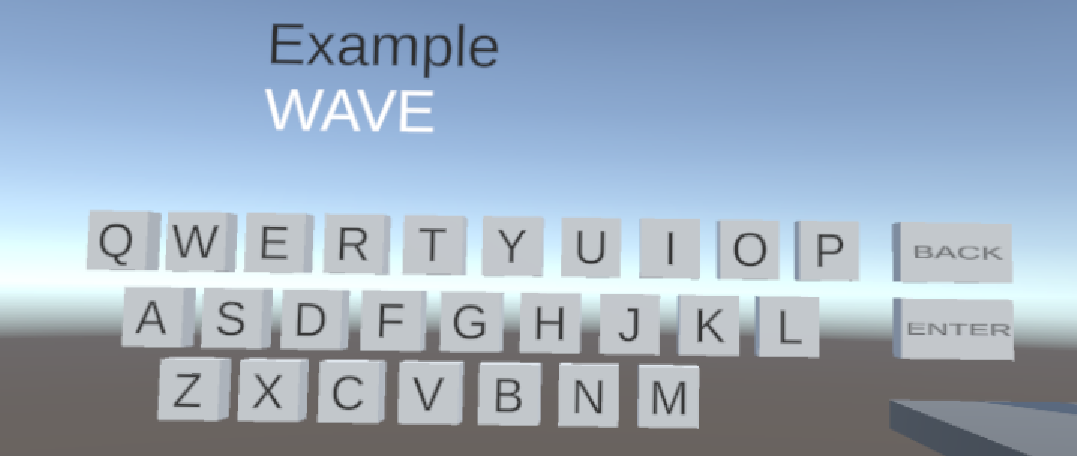

Cubic Keyboard for Virtual Reality

A virtual keyboard in VR, which is typically a planar keyboard, allows a user to type text while wearing an HMD. The user selects a key by aiming a ray extended from a VR controller at the key and pressing a button. However, such a keyboard is a wide QWERTY keyboard that floats in front of the user, and thus it occupies a large part of the user's field of view. In contrast to virtual keyboards that use only the 2D space of VR environments, we have proposed a cubic keyboard that uses the 3D space of VR environments to reduce the occupancy of the user's field of view. The keyboard consists of 27 keys arranged in a 3 × 3 × 3 (vertical, horizontal, and depth) 3D array, where the 26 letters of the alphabet are assigned to the 26 keys other than the center key. Using our stroke-based text entry method with 3D movement, the user can enter one word per stroke.
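The 3 × 3 × 3 arrangement can be sketched as a mapping from letters to grid coordinates that skips the center cell. The alphabetical assignment order, the coordinate convention, and the function names below are assumptions made for illustration; the actual letter placement of the cubic keyboard is not given here.

```python
import string
from itertools import product

CENTER = (1, 1, 1)  # the one cell with no letter assigned


def build_cubic_layout() -> dict:
    """Map each of the 26 letters to a distinct non-center cell.

    Letters are placed in alphabetical order over the cells in
    lexicographic order, which is an assumed layout.
    """
    cells = [c for c in product(range(3), repeat=3) if c != CENTER]
    return dict(zip(string.ascii_lowercase, cells))


def word_to_path(word: str, layout: dict) -> list:
    """Return the sequence of cells a stroke for `word` would visit."""
    return [layout[ch] for ch in word.lower()]
```

With this assumed layout, `word_to_path("vr", build_cubic_layout())` yields the two cells assigned to `v` and `r`, which a stroke-based recognizer could then match against the controller's 3D trajectory.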

We propose a left–right hand distinction system on the touchpad of a laptop computer.

The system uses a mirror and the laptop's front-facing camera to capture an image of the area around the keyboard and the touchpad. It distinguishes the user's left and right hands by processing the captured image. As a result, the system works without additional sensors such as Leap Motion or Kinect.

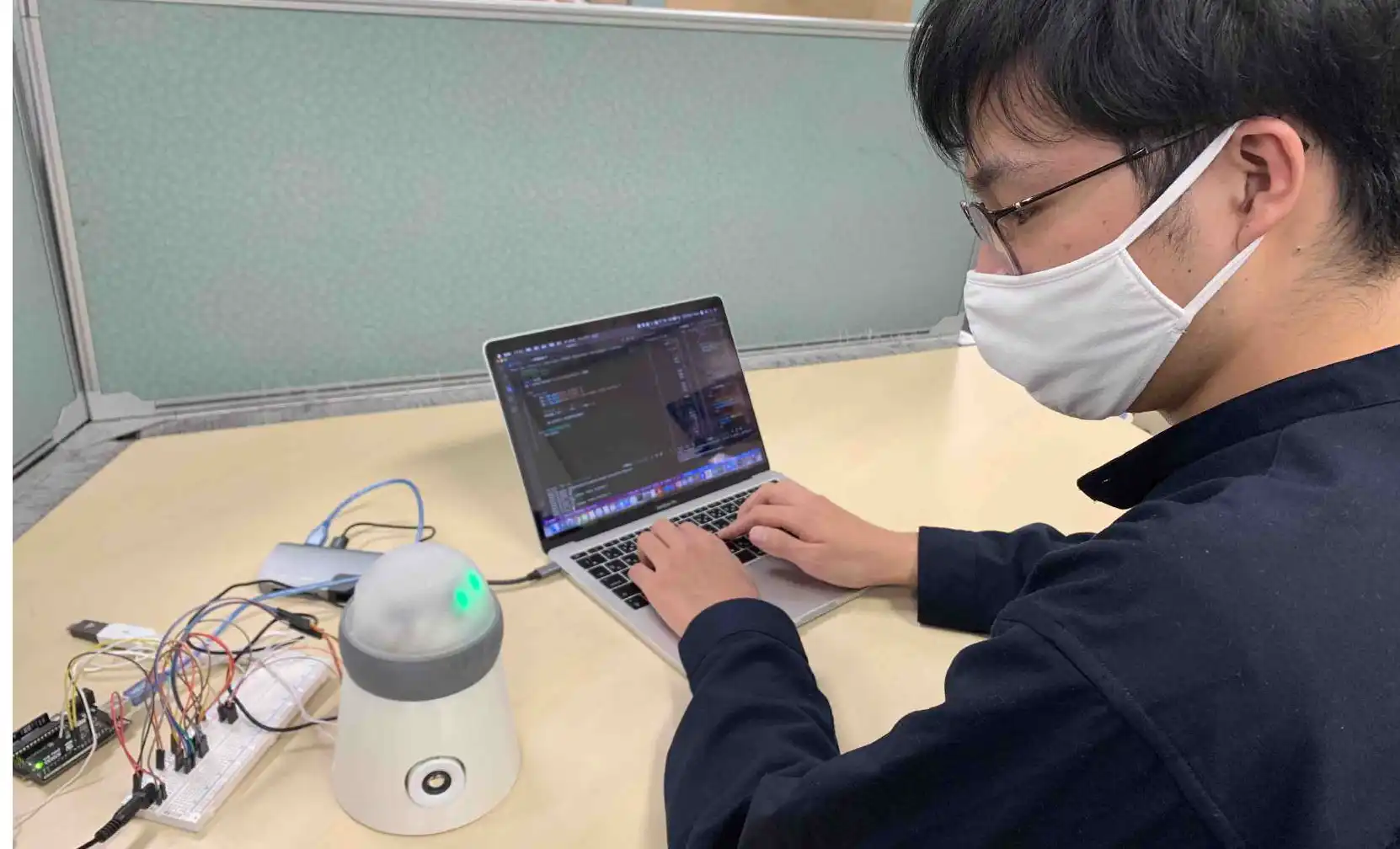

Supporting the Initiation of Remote Conversation by Presenting Gaze-based Awareness Information

It is difficult to initiate conversations between remote people. A lack of non-verbal information in video calls contributes to this difficulty. In face-to-face situations, conversations are initiated by exchanging gaze information, especially mutual gaze. However, mutual gaze is not established between remote locations. In this study, we proposed a voice call system with robots that helps remote people initiate conversations by exchanging gaze information. The evaluation results showed that the proposed system was effective in reducing the psychological burden of initiating conversations between remote people.

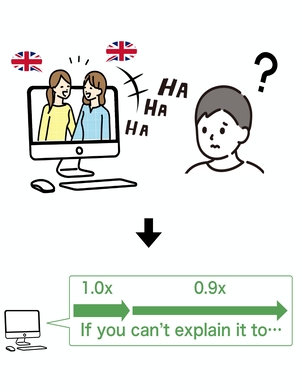

Multi-party Video Conferencing System with Gaze Cues Representation for Turn-taking

In a multi-party video conference, smooth turn-taking is more difficult to achieve than in face-to-face communication. This is probably because gaze cues are not shared in a multi-party video conference. In this research, we propose a system that facilitates turn-taking by sharing gaze cues in a multi-party video conference. We implemented video conferencing systems that use arrows and changes in video window size to share gaze cues as in face-to-face communication. We also conducted an experiment to investigate the effect of the system on turn-taking. The results suggested that our system could facilitate turn-taking and communication.